Abstract

Eighteen months ago, “AI at work” was a thought experiment. Today, AI drives the core of our new SaaS platform. The path wasn’t linear, but every detour and misstep yielded lessons worth sharing.

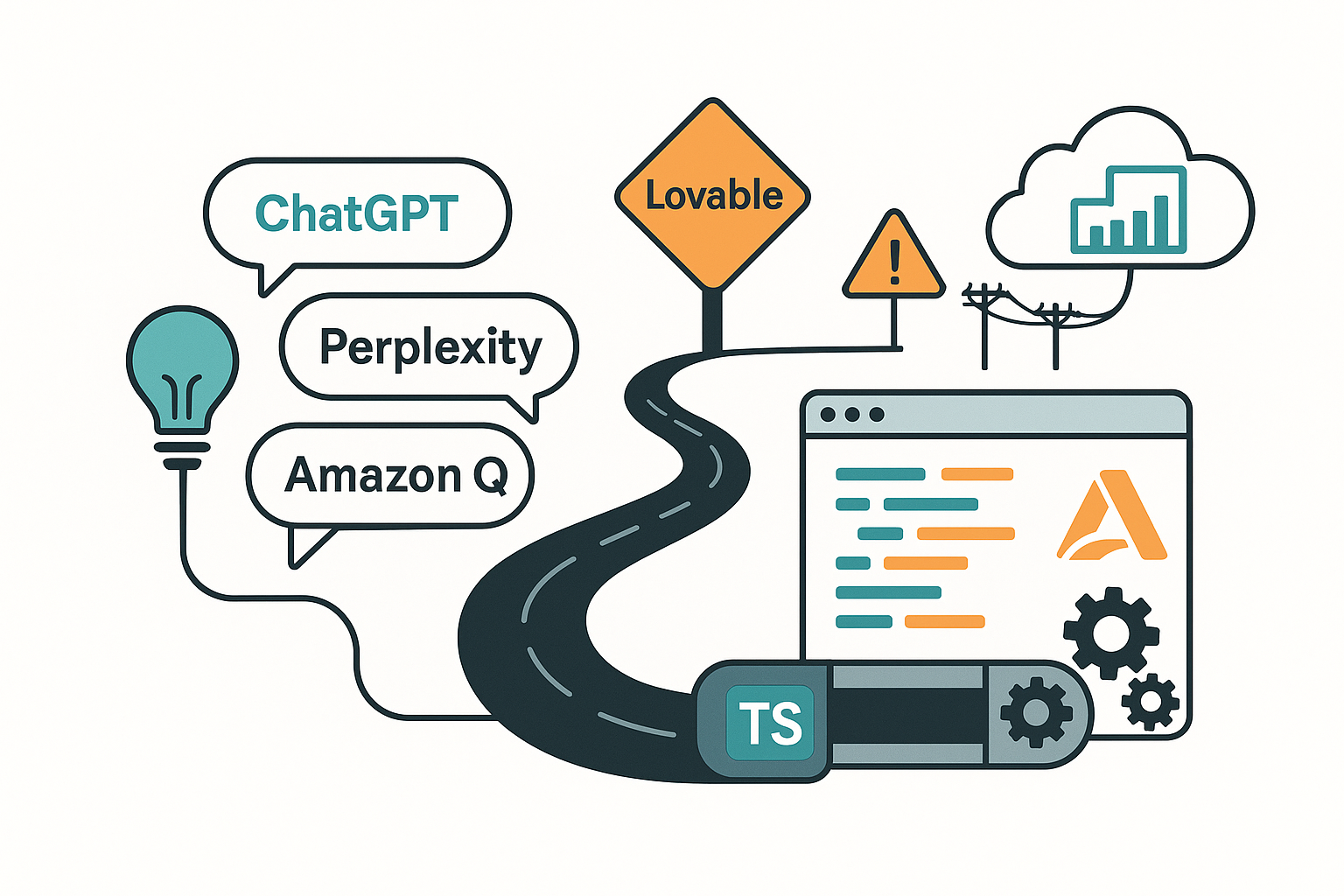

1. Kicking the Tires: Early Exploration

- ChatGPT for rapid ideation

- Perplexity for concise research

- Amazon Q Developer for AWS-specific queries

Each was helpful on its own, yet none could read an entire codebase or grasp our architectural context.

2. The Platform Pivot

I briefly tried Lovable, a low-code, drag-and-drop AI app builder. Its closed, hosted model clashed with our AWS-first strategy, so I moved on.

3. Enter Cursor: Real-Code Integration

Cursor, a VS Code-style IDE with AI agents fluent in TypeScript, Serverless, and Amplify Gen 2, became my primary workspace.

What’s working

- Cross-file context (≈90% accuracy and climbing)

- Branch-based environments spun up automatically by our CI/CD pipeline

- Developer sandboxes with live services and data

- Type-checking and linting enforced on every GitHub pull request

What still stings

- Short-term memory lapses—the agent sometimes forgets the larger epic

- Custom CDK inside Amplify: Amplify’s guardrails fight deeper IaC patterns

- Limited support for bleeding-edge AWS features (Amplify Gen 2, Bedrock)

- Manual throttling required to dodge surprise bill spikes
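Where the spend comes from your own model calls (for example, Bedrock invocations from a Lambda) rather than the IDE subscription, part of that throttling can be automated. What follows is a minimal sketch, assuming you can estimate each call’s cost in whole cents before making it. `DailyBudgetGuard` is a hypothetical name, not an AWS or Cursor API, and it keeps its counter in memory, resetting at each UTC midnight.

```typescript
// Hypothetical in-memory guard that caps *estimated* model spend per UTC day.
// Amounts are integer cents to avoid floating-point drift; nothing here calls
// a real billing API.
class DailyBudgetGuard {
  private readonly dailyCapCents: number;
  private readonly now: () => Date;
  private spentCents = 0;
  private day: string;

  constructor(dailyCapCents: number, now: () => Date = () => new Date()) {
    this.dailyCapCents = dailyCapCents;
    this.now = now; // injectable clock, handy for tests
    this.day = this.currentDay();
  }

  // UTC calendar day such as "2025-01-01"; a new day resets the counter.
  private currentDay(): string {
    return this.now().toISOString().slice(0, 10);
  }

  // Records the cost and returns true only if it fits under today's cap.
  tryConsume(estimatedCents: number): boolean {
    if (!Number.isInteger(estimatedCents) || estimatedCents < 0) {
      throw new RangeError("estimatedCents must be a non-negative integer");
    }
    const today = this.currentDay();
    if (today !== this.day) {
      this.day = today;
      this.spentCents = 0;
    }
    if (this.spentCents + estimatedCents > this.dailyCapCents) {
      return false; // over budget: caller should skip, queue, or downgrade
    }
    this.spentCents += estimatedCents;
    return true;
  }

  // Budget left as of the last tryConsume call.
  get remainingCents(): number {
    return Math.max(0, this.dailyCapCents - this.spentCents);
  }
}
```

Call `tryConsume` before each model request and skip or queue the request when it returns `false`. Because the state lives in one process’s memory, separate Lambda instances would each keep their own count; a shared store would be needed before treating this as a real control.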

4. Model Roulette: Picking the Right LLM

After cycling through Claude 3.5 Sonnet, Gemini 2.5, and now Claude Sonnet 4, I’ve learned one thing: choose the model whose vocabulary matches your domain, not merely the benchmark winner.
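One lightweight way to act on that advice is to replay a few of your own domain prompts against each candidate and check whether the answers speak your stack’s language. The sketch below is hypothetical: the model names and prompts are placeholders, the responses are assumed to have been collected beforehand, and counting expected terms is a crude proxy for quality, not the evaluation behind the choices above.

```typescript
// Hypothetical harness: scores each candidate model by how many expected
// domain terms appear in its answers to the same set of prompts.
interface DomainCase {
  prompt: string;
  expectedTerms: string[]; // vocabulary a good answer should use
}

// responses[model][i] is that model's answer to cases[i], collected beforehand.
type Responses = Record<string, string[]>;

// Fraction of expected terms that appear (case-insensitively) in the answer.
function vocabularyScore(answer: string, expectedTerms: string[]): number {
  if (expectedTerms.length === 0) return 0;
  const text = answer.toLowerCase();
  const hits = expectedTerms.filter((t) => text.includes(t.toLowerCase())).length;
  return hits / expectedTerms.length;
}

// Returns [model, meanScore] pairs sorted best first.
function rankModels(cases: DomainCase[], responses: Responses): Array<[string, number]> {
  const ranked = Object.entries(responses).map(([model, answers]): [string, number] => {
    const total = cases.reduce(
      (sum, c, i) => sum + vocabularyScore(answers[i] ?? "", c.expectedTerms),
      0,
    );
    return [model, cases.length === 0 ? 0 : total / cases.length];
  });
  return ranked.sort((a, b) => b[1] - a[1]);
}
```

A high score here only says a model uses the right words; its answers still need a human read for correctness.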

5. Velocity Without Overhead: A Solo Productivity Booster

Conventional wisdom says to hire an offshore team, spend weeks onboarding, and endure time-zone lag, all to ship a proof of concept. Instead, I built the PoC solo in three weeks and, over the following three months, evolved it into a feature-rich MVP with:

- Multi-tenant authentication

- Embedded QuickSight dashboards

- GenAI-generated insights

- A GitHub-integrated pipeline that deploys any feature branch to a live-data sandbox
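Deploying any feature branch to its own sandbox means turning arbitrary Git branch names into safe environment identifiers. The sketch below is a hypothetical helper (`branchToEnvName` is not taken from our pipeline). It assumes the name ends up inside a CloudFormation stack name, which may contain only letters, digits, and hyphens, must start with a letter, and cannot exceed 128 characters; the 40-character budget and the hash suffix are illustrative choices.

```typescript
// Hypothetical helper: turns a Git branch name into a sandbox environment name
// that is also safe inside a CloudFormation stack name (letters, digits and
// hyphens only, starting with a letter).
const MAX_ENV_NAME_LENGTH = 40; // illustrative; leaves room for a stack-name prefix

// 32-bit FNV-1a hash, used only to keep truncated names distinct.
function fnv1a(input: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

function branchToEnvName(branch: string): string {
  let name = branch
    .toLowerCase()
    .replace(/^refs\/heads\//, "") // accept full refs as well as short names
    .replace(/[^a-z0-9]+/g, "-") // any other run of characters becomes one hyphen
    .replace(/^-+|-+$/g, ""); // no leading or trailing hyphens

  if (!/^[a-z]/.test(name)) {
    // Must start with a letter; "b" alone also covers an empty result.
    name = `b-${name}`.replace(/-+$/, "");
  }

  if (name.length > MAX_ENV_NAME_LENGTH) {
    // Truncate, then append a hash of the full branch so that long branches
    // sharing a prefix still map to different environments.
    const suffix = fnv1a(branch);
    const head = name.slice(0, MAX_ENV_NAME_LENGTH - suffix.length - 1).replace(/-+$/, "");
    name = `${head}-${suffix}`;
  }
  return name;
}
```

For example, `feature/ABC-123_login` maps to `feature-abc-123-login`, and `refs/heads/main` maps to `main`.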

6. Lessons Learned: Key Takeaways

- Prototype broadly, commit narrowly. Trying ten tools during development is fine; bring only the best two into production.

- Own your context. IDE-native agents beat chat windows because they “see” real code.

- Keep humans in the loop. AI handles boilerplate; people own architecture, security, and style.

- Think like a user. Build and test often, from the user’s perspective.

What’s Next?

Depth over headcount: smoother custom-CDK workflows, tighter Amplify Gen 2 hooks, richer GenAI summaries, and a self-service admin portal. All while staying lean, adding features, and cutting down on 2 a.m. Lambda debugging. Another field report soon!

Matt Pitts, Sr Architect
